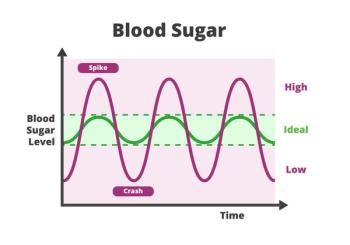

Despite decades of substantial investment in applied research across multiple spectroscopic sensing technologies, an accurate, portable, and painless noninvasive glucose monitor remains a major unmet goal in diabetes care. Progress has been held back by persistent challenges with sensitivity, analyte specificity, accuracy, calibration stability, and biological interference.